Okay, so I was eventually going to get round to this one at some time. Here's the real twist... I know nothing about social media. Okay, that's a bit of a lie/exaggeration, I know enough to know that I don't really have a clue. So I did what everyone else would do in my shoes... called a consultant.

I guess this post really starts some two years ago, when I first joined Prop Data. Online marketing has come a long way from the days when stuffing keywords was enough to call yourself an SEO; these days it's a full-time commitment to the betterment of the internet (yeah, I'm fighting the crusade for the good guys). Okay, I admit to spamming on occasion, but who hasn't? When I joined the company I knew that a lot was changing online: Web 2.0 wasn't just making websites easier to maintain and update, it was also making them a lot more interactive. But while visitors could interact with a site, it was still just code; the interaction needed to work back to the visitors as well. Enter the age of blogs and social media in general.

This brings us back to last Friday. Having joined Twitter a good long while ago, I've been closely following other folk in the SEO, SEM and social media circles. While precious few in South Africa claim to follow these trends, one chap, Mike Stopforth, has put himself out there. A few months back he tweeted an offer of a free consultation; I replied, and he agreed to come in and speak to us. This being Prop Data, the team was overworked and understaffed, the usual. So we changed the format a little and it became an open discussion between Mike, the sales guys and me.

The discussion was great. Mike broke things down into a few very simple points and did a great job of highlighting what to consider and which questions to ask before venturing into any social project. I guess we already knew many of these points; it was just a case of putting them into perspective. After all, there is rarely any point in doing something simply for the sake of doing it. Focus and results are what matter, as they always should be. Sometimes having a blog isn't a good idea when a fan page would make a lot more sense: not everybody likes a particular product, but it may still have many fans. Simple point, but I'd never thought of it that way. What can I say, I don't know social media.

I guess, like so many other things IRL (that's "In Real Life" ;)), it's not what you do, but how you do it. Social media is an animal. You have to feed it and nurture it; if you don't, it will turn and bite you. So, to those wishing to engage in social media: "Are you ready for that kind of commitment?"

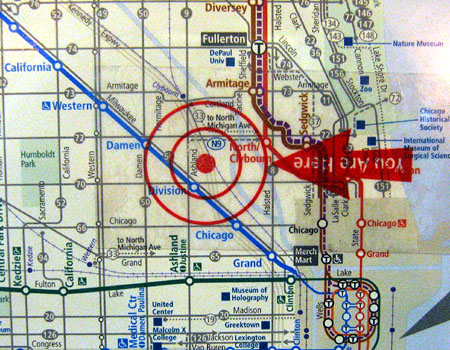

There are two types of sitemap that you might employ on a website. The first is the HTML version, intended to give the average visitor an overview of your website. The second is a machine-readable XML sitemap (commonly referred to as a Google Sitemap), intended to inform search bots of the pages found on your website. I'd always recommend using both, in various forms.
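To make the difference between the two concrete, here is a minimal Python sketch that builds both from the same list of pages. It assumes the standard sitemaps.org 0.9 XML format (a `urlset` of `url` entries, each with a `loc` and an optional `lastmod`) and uses only the standard library; the `Page` class, the function names and the example.com URLs are hypothetical, for illustration only.

```python
from dataclasses import dataclass
from html import escape
from typing import List, Optional
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol (version 0.9).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    url: str                       # absolute URL of the page (hypothetical examples below)
    title: str                     # human-readable title, used only in the HTML sitemap
    lastmod: Optional[str] = None  # optional W3C date such as "2009-06-01"


def build_xml_sitemap(pages: List[Page]) -> str:
    """Machine-readable sitemap for search bots."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in URLs automatically.
        ET.SubElement(url, "loc").text = page.url
        if page.lastmod:
            ET.SubElement(url, "lastmod").text = page.lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


def build_html_sitemap(pages: List[Page]) -> str:
    """Plain list of links giving human visitors an overview of the site."""
    items = "\n".join(
        f'  <li><a href="{escape(p.url)}">{escape(p.title)}</a></li>' for p in pages
    )
    return f"<ul>\n{items}\n</ul>"


if __name__ == "__main__":
    site = [
        Page("https://www.example.com/", "Home", "2009-06-01"),
        Page("https://www.example.com/contact/", "Contact us"),
    ]
    print(build_xml_sitemap(site))   # e.g. saved as /sitemap.xml for the bots
    print(build_html_sitemap(site))  # e.g. embedded in a "Site map" page for visitors
```

Generating both from one list is just a convenience: it keeps the human-facing and bot-facing versions from drifting apart as pages are added or removed.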


Well it's been some time since the Google killer


Links… aren’t we all a little tired of hearing about links? Don’t link to bad neighbourhoods, don’t link to link farms… don’t get links from bad neighbourhoods, don’t get links from link farms. Don’t buy links. Don’t sell links. Don’t have too many links on one page. Don’t let all the links pointing to your website have the same anchor text.
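Two of these rules are easy to check mechanically: how many links sit on one page, and whether a single anchor text dominates the links pointing at you. Below is a rough Python sketch using only the standard library's `html.parser`. The function names are hypothetical, and since there's no built-in way to see who links to you, it assumes you already have the inbound anchor texts as a list (for example, exported from a backlink report).

```python
from collections import Counter
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class LinkCollector(HTMLParser):
    """Collects (href, anchor text) pairs for every <a href="..."> on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[Tuple[str, str]] = []
        self._href: Optional[str] = None  # href of the <a> currently open, if any
        self._text: List[str] = []        # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:  # ignore named anchors such as <a name="top">
                self._href = href
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse runs of whitespace so "Cheap   flats" becomes "Cheap flats".
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None


def collect_links(page_html: str) -> List[Tuple[str, str]]:
    """All links on one page; len() of the result is the on-page link count."""
    collector = LinkCollector()
    collector.feed(page_html)
    collector.close()
    return collector.links


def anchor_text_share(anchors: List[str]) -> Tuple[str, float]:
    """Most common anchor text (case-insensitive) and its share of all links."""
    if not anchors:
        return "", 0.0
    counts = Counter(a.strip().lower() for a in anchors)
    text, n = counts.most_common(1)[0]
    return text, n / len(anchors)
```

What counts as "too many" links, or "too much" of one anchor text, is a judgement call; the sketch only reports the numbers and deliberately doesn't hard-code a threshold.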


A while back someone asked me what I wanted to be when I grew up. I just laughed and replied that I’m most likely never going to grow up, so there’s no need to think about it! Going back to my first childhood, though, I can recall wanting to play computer games for a living – didn’t we all? This got me thinking, which, as most know, is quite a rare occurrence.


You’ve built a state-of-the-art website, expecting it to be your little nest egg. But the thought running through your mind now is how you are going to get people to see it. The first answer that comes to mind is to gain top rankings with the major search engines (and anyone else, for that matter). That leads to the next question: what keywords are you going to target?